Markov chains are a bit like state machines, with a probability attached to each transition. The chain has no memory of previous states: the next state depends only on the current one. They have plenty of applications, but a very common one is generating realistic-looking text – for example, to fool Bayesian spam filters.

I’ve had a long-standing desire to make a Twitter bot using Markov chains, perhaps to make up for the lack of my own tweets! The plan is pretty simple: build our model, produce some output, and use the Twitter API to post it.

The theory behind building the model is simple. If we take a sample corpus, for example the first paragraph of this blog post, we can analyse the text and find that if the current letter is “a”, then the probability of the next letter being “r” is 0.15, the probability of it being “t” is 0.2, the probability of it being “i” is 0.1, and so on.
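To make the counting concrete, here is a minimal sketch of how those letter-to-letter probabilities could be estimated. The function name `letter_transition_probabilities` and the sample string are my own for illustration, and I'm assuming we only count pairs of letters inside a word (so spaces and punctuation never become states).

```python
from collections import Counter, defaultdict


def letter_transition_probabilities(text):
    """Estimate P(next letter | current letter) from adjacent letter pairs."""
    counts = defaultdict(Counter)
    for word in text.lower().split():
        # Assumption: keep letters only, and never pair letters across words.
        letters = [ch for ch in word if ch.isalpha()]
        for current, following in zip(letters, letters[1:]):
            counts[current][following] += 1
    # Normalise each letter's follower counts so that they sum to 1.
    return {
        current: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for current, followers in counts.items()
    }


sample = "markov chains are kind of like state machines"
probabilities = letter_transition_probabilities(sample)
print(probabilities["a"])  # followers of "a" and their estimated probabilities
```

With a corpus this small the numbers are very rough; they only start to reflect the writer once there is a lot more text to count.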

This can then be extended to pairs of letters, or even words. Then, by walking the resulting Markov chain, we can mimic the style of the writer. From this description, it’s easy to see how the size of the corpus is going to change the probabilities and affect the final result.
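As a rough sketch of the word-level version (not the code this post ends up using), the chain can be stored as a dictionary from each run of words to the list of words seen after it. `build_chain`, `walk` and the `order` parameter are hypothetical names chosen for this example. Keeping repeated followers in the list means a plain random choice picks each one in proportion to how often it appeared.

```python
import random
from collections import defaultdict


def build_chain(words, order=2):
    """Map each run of `order` consecutive words to the words seen after it."""
    chain = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return chain


def walk(chain, length=20, seed=None):
    """Generate at most `length` words by repeatedly sampling a follower."""
    rng = random.Random(seed)
    state = rng.choice(list(chain))  # assumes the chain is not empty
    output = list(state)
    while len(output) < length:
        followers = chain.get(state)
        if not followers:  # dead end: this state only occurs at the very end
            break
        output.append(rng.choice(followers))
        state = tuple(output[-len(state):])
    return " ".join(output[:length])


corpus = "the cat sat on the mat and the cat ran off with the hat".split()
print(walk(build_chain(corpus, order=1), length=10))
```

A larger `order` sticks closer to the source text, while a smaller one wanders more freely, which is the same trade-off as corpus size seen from the other side.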

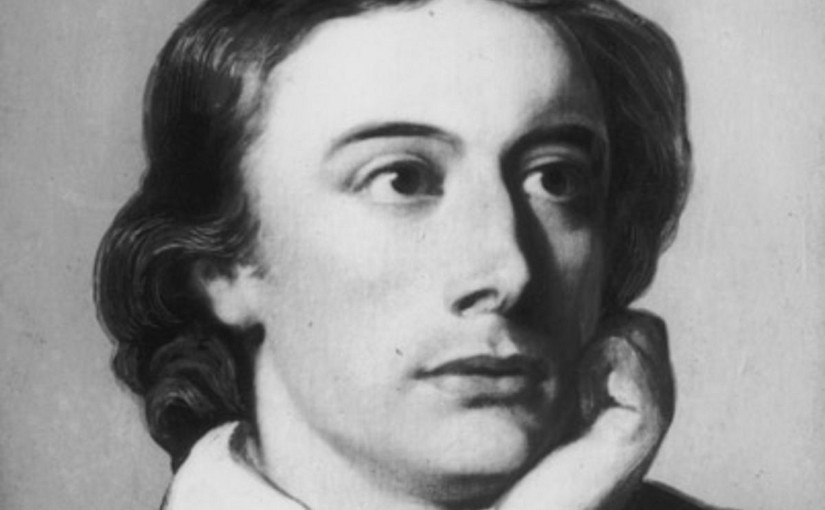

First things first – we need a reasonably large corpus from which to generate the text. I picked John Keats, hopeless romantic and lover of nightingales, which fits with the theme of tweeting. We can get hold of a few bits of Keats’s work from Project Gutenberg. Some clean-up of the text is required to remove unwanted words – the preamble, line numbers and headings – otherwise these will “pollute” our corpus.
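The clean-up can be done by hand, but a small script is one way to go about it. The sketch below rests on several assumptions about how the downloaded file is laid out: that the body sits between `*** START OF ... ***` and `*** END OF ... ***` marker lines, that verse line numbers appear at the end of a line, and that headings are written in capitals. `clean_corpus` is a made-up helper name, and real files will likely need the patterns adjusting.

```python
import re

# Assumed marker lines surrounding the body of the text.
START = re.compile(r"\*\*\*\s*START OF.*?\*\*\*", re.IGNORECASE)
END = re.compile(r"\*\*\*\s*END OF.*?\*\*\*", re.IGNORECASE)


def clean_corpus(raw):
    """Drop the preamble, trailing line numbers and all-caps headings."""
    start = START.search(raw)
    begin = start.end() if start else 0
    end = END.search(raw, begin)
    finish = end.start() if end else len(raw)

    kept = []
    for line in raw[begin:finish].splitlines():
        line = re.sub(r"\s+\d+\s*$", "", line)  # trailing verse line number
        stripped = line.strip()
        if not stripped or stripped.isdigit():
            continue  # blank line, or a line that is only a number
        if stripped.isupper():
            continue  # assumed heading style, e.g. "ODE TO A NIGHTINGALE"
        kept.append(stripped)
    return "\n".join(kept)
```

It is worth eyeballing the result afterwards, since a genuine line written entirely in capitals would be thrown away by the heading rule.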

As for the code, this being the 21st century, we don’t have to do much of this ourselves. We can quickly Google a bit of Python that’ll generate the Markov chain model and use it to output some text, all courtesy of Shabda Raaj.

A first run leaves a bit to be desired, so we’ll make a few minor adjustments – we make everything lower case, and add a back-off to prevent stop words like “and” or “of” appearing at the end of our sentences:

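    stopwords = ['and', 'of', 'with', 'the', 'a', 'which']

    # back off until the last word is not a stop word
    # (the length check avoids an IndexError if every word gets popped)
    while gen_words and gen_words[-1].lower() in stopwords:
        gen_words.pop()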

For now, I’ve decided against stripping punctuation from the corpus and lower-casing words before they go into our Markov model. Without that normalisation, “day.”, “day” and “Day” are all treated as separate words, so each word has fewer possible successors and our output has a bit less variety – often Keatsbot will lift whole sentences from the underlying corpus. What a fraud. But I think on balance it gets us closer to Keats’s style, since punctuation is of course part of that style.
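To see what is being traded away, here is a small sketch of the normalisation I left out. `normalise` is a hypothetical helper, not part of the Keatsbot code, and it only strips the ASCII punctuation in Python's `string.punctuation`, so curly quotes would survive.

```python
import string


def normalise(word):
    """Lower-case a token and strip leading and trailing ASCII punctuation."""
    return word.lower().strip(string.punctuation)


tokens = "Day. day Day day, night".split()
print(len(set(tokens)))                     # 5 distinct raw tokens
print(len({normalise(t) for t in tokens}))  # 2 distinct normalised tokens
```

Fewer distinct tokens means more followers per token, and so more variety in the walk, at the cost of losing the punctuation that carries a lot of the style.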

Finally, we want to tweet it. Ricky Rosario helps us with this, pointing us to the excellent Python Twitter Tools. We just need to pip install twitter to download the package, then it’s as easy as:

    from twitter import Twitter, OAuth

    twitter = Twitter(auth=OAuth(token, token_key, con_secret_key, con_secret))
    twitter.statuses.update(status=output)

So – set up a Twitter account, add an application from the developer console to get the various OAuth keys, and we can sing of summer in full-throated ease!

You can find the full code on GitHub.

I did something similar a long time ago to generate new English-sounding placenames letter by letter (so long ago I think it was written in Visual Basic). It worked remarkably well – wish I knew whether I still have the code somewhere.

Five minutes of searching later… found it, and the .exe dating from 2001 still works! Hats off to Microsoft.